The Assumption of Randomization

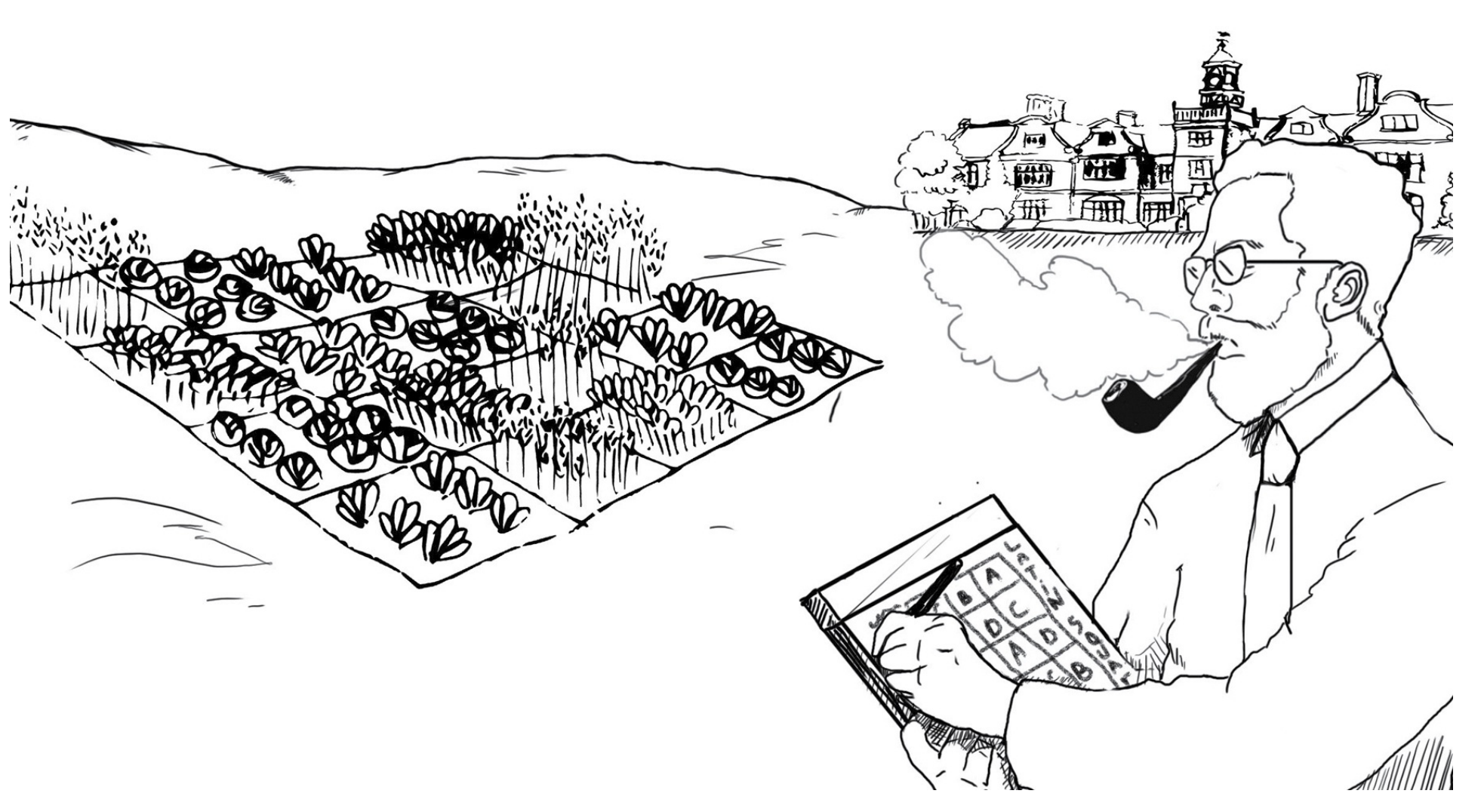

In the early 20th century, a scientist, R.A. Fisher wanted to study the impact of various fertilizers on the yield of his crops. Fisher started by neatly splitting his field into two parts and applying the fertilizer to only one. Fisher soon realized that reality was uncontrolled and random. The two halves of his land were different from each other in numerous regards. Both were receiving a different amount of sunlight, had a different soil quality, and also had variations in the water distribution (the confounders). With such vast differences in the underlying properties of the two halves, it was difficult to reliably estimate the impact the fertilizer was having.

To solve the problem, Fisher came up with an ingenious idea and in the process invented Randomised Control Trials (RCT), the holy grail behind modern experimentation. Fisher decided to neutralize the natural randomness in the environment by self-synthesizing randomness in his study.

Fisher divided his field into smaller squares and randomly chose the squares which would get the fertilizers and which won't. This randomization ensured that all other factors affecting the yield were evenly divided between the 'fertilizer' and the 'no fertilizer' squares. The difference in the total yield of the two groups now revealed an unbiased estimate of the causal impact that the fertilizer was having on the yield.

And that was the birth of modern randomized experimentation that has majorly governed the scientific processes for the past 100 years.

Randomization is a fundamental component of all experimentation today. Most experimental designs involve the use of a randomizer that decides which subject gets the treatment and which does not. This randomizer helps in isolating the treatment (the fertilizer) from any other factors that might be impacting the target variable (the yield). The other factors are more commonly known as the "confounders" and perfect randomization ensures that any confounding factor (such as sunshine or soil type) are evenly distributed between the control group and the treatment group. The assumption of perfect randomization is hence a core component of all modern experiments including A/B Testing.

The Need for Perfect Randomizers

A perfect randomizer that randomly spits out 0s and 1s, correlates with nothing, and hence evenly distributes all other attributes that the subjects might have. If a drug might work differently across people of different races (due to genetic differences), randomize who to add to the control group and who to add to the variation group and the randomizer will ensure an even distribution of races.

The crucial property of the randomizer that ensures the even distribution is the fact that the randomizer should not correlate with anything else even by chance. But randomization in practice has many more pitfalls than you can imagine.

Suppose that a doctor needs to administer placebos to the patients in the control group and actual medicines to the patient in the variation group, it might be the case that the doctor unknowingly lets off subtle cues that impact the behavior of patients differently in the control group and the variation group. In that case, you will be biasing the results. Blind studies usually ensure that the patients do not know which treatment are they being given. Double-blind studies ensure that even the experimenters do not know which patients are in control and which are in variation. The doctor in the featured cartoon of this post is trying to implement a double-blind study with a faulty understanding of double-blind experiments (although the approach seems to satisfy the theoretical purpose).

Perfect Randomizers theoretically distribute all confounding variables equivalently between the control and the variation. This is a key requirement of all experiments for the results to be accurate. Hence, the assumption of perfect randomization is necessary for all experiments. If the randomizer by chance correlates with an attribute that impacts the target variable, the distribution of that attribute in the control and the variation would be uneven and the results of the study will be flawed.

But as it happens theoretically true randomization is not possible in the real world, only pseudo-randomization can be achieved.

True Randomization and Pseudo Randomization

It doesn't seem like generating random numbers is very difficult. You can simply teach a parrot to mindlessly recite a sequence of 0s and 1s. A layman would consider that to be perfectly random because they would not expect the pattern to correlate with an attribute of the subjects. That is too unlikely. But, statistically, the sequence is far from random. Statistically, the stream of numbers that the parrot is spitting out is not random at all, it is arbitrary.

Believe it or not, there is a huge difference between random and arbitrary. Randomness guarantees some properties that arbitrariness cannot. If you randomly generate 0s and 1s, 1000 times, randomness guarantees that there is a very thin chance that there will be 400 - 0s and 600 - 1s. Randomness guarantees that there is a 99% chance that numbers will be distributed between 460 and 540. Can arbitrariness guarantee that? What if the parrot says more 1s just because they are easier to say?

All statistics work on the guarantee of randomness, not on the guarantee of arbitrariness. Modern computers have given us numerous ways to generate random numbers from various distributions of our choice. Many mathematical algorithms have been developed that apply complex procedures that guarantee (with some assumptions) that the output will follow perfect randomization.

But all mathematical algorithms for randomness need to start with a seed. Or else no matter how complex the procedure might be, it still will be a deterministic (non-random) set of instructions that generate the same output. One might say that use the random number generated in the previous instance as the seed. The seed will be random in that case. But once you give it a thought, you soon realize that it is a cascading problem no matter how many steps you imagine. Eventually, you need to start with something. That something is forced to be arbitrary by nature.

Most algorithms pick up a function of time in milliseconds to start with the seed. They then draw out an independent chain of random numbers by using the generated numbers as the seed for subsequent iterations. Most implementations allow you to set a seed yourself as well. Usually, it is not a very big problem and computer-based random-number generators work close to fine. But theoretically, the problem always exists. Any random number generator is somewhere connected to something arbitrary. Something arbitrary is not random, it can always be estimated if we can record and process all minute variables involved in the generation of that arbitrary number (a fundamental contradiction to randomness).

All random number generators in practice are pseudo-randomizers and not true-randomizers. This is because theoretically there is no scope of randomness in physics as long as you don't start to observe quantum bits that Heisenberg proved were theoretically random. All mathematical algorithms for randomness are hence hinged on arbitrarity somewhere. Although randomizers work fine in practice, you always need to be sure that due to some error they don't end up correlating with something in the study.

Randomization in Modern A/B Tests

Modern A/B Testing engines use advanced randomizers for assigning visitors to control and variation usually in the form of a hash function that takes some meaningful seed and tries to ensure the following properties.

- The visitors should be equally likely to land in the control group or the variation group.

- A visitor once assigned to a group should be assigned to the same group if they revisit the experiment.

- Visitor allocation between different experiments should not correlate with each other.

(The above conditions have been listed in the paper by Fabijan et al. on Sample Ratio Mismatch.)

While the first two are still easy to manage, the third condition becomes hard to manage for organizations running more than 10,000 experiments every year. At this rate, even by chance, some tests tend to correlate and hence compromise the assumption of randomization in A/B tests.

To handle subtle issues in randomization that might happen once in a while in large experimentation organizations, a Sample Ratio Mismatch (SRM) is used as a safeguard. An SRM checks if the number of visitors in the control and variation are close to the ratio assigned in the randomizer (usually 50-50). If not, it generates an alert. An SRM is a symptom of many test setup issues and usually suggests that the test needs to be debugged.

If you are a large experimentation organization, once in a while the assumption of randomization might be broken due to various reasons beyond your control/expectation. It is usually a good idea to augment your experiments with a Sample Ratio Mismatch checker that can detect and alert if something looks fishy with your test.

Conclusion

Understanding randomization and why it matters is the bread and butter of a statistician. Statistics was born because the world is random and deterministic concepts of mathematics fail to accurately describe the real world. The real world is random and statistics provide the tools to navigate through uncertainty. But the biggest accomplishment of statistics has been to realize that randomization is a double-edged sword. It makes the world difficult to understand but also provides the power to tackle the most difficult problems of uncertainty.

Casinos make money because randomness guarantees them that if maximum bet sizes are fixed then profits cannot be below a certain amount when enough games are played. Close inspections of even a random set of people at the airport will be able to ensure that a major proportion of people refrain from getting anything illegal to the airport. Finally, stock market strategies work because randomly diversifying your portfolio ensures that when one part of your portfolio loses money, there is a chance that other stocks are making money to cancel out the loss.

Randomization is like that neighbor's dog that barks fiercely at you if you are afraid of it. Once you understand it and get your way with it, it wags its tail and becomes a friend forever.