Effect-Size Sample-Size Tradeoff: A Counter-Intuition

Context: This is the fourth article in the series, The Statistical Nuances in Experimentation. The parent blog will give you a broader context to the concepts discussed in this article.

When a budding digital experimenter runs her first experiment, she probably hopes to make a change that will have a large impact on her goal (and on her career). When in the first few hours she does not see an increased uplift, she probably feels disheartened and rushes to end the experiment if she observes a loss of a few conversions. She iteratively tries our various new ideas changing the colors of the button, the text of the banner, and the images of the homepage just to land on that goldmine that will make her a star in her organization. However, the truth is great ideas are few and do not come by that easily. Most ideas are mediocre and they say you have to quickly kiss a lot of frogs to find your prince.

In the search for impactful ideas that are so difficult to find, it is easy to develop the intuition that impactful ideas will also be the ones that will be the hardest to test. Similarly on the other hand, in the journey to find good ideas, one expects to quickly iterate over all bad ideas throwing them out as fast as possible. But the truth is that life and statistics both are counter-intuitive in that regard.

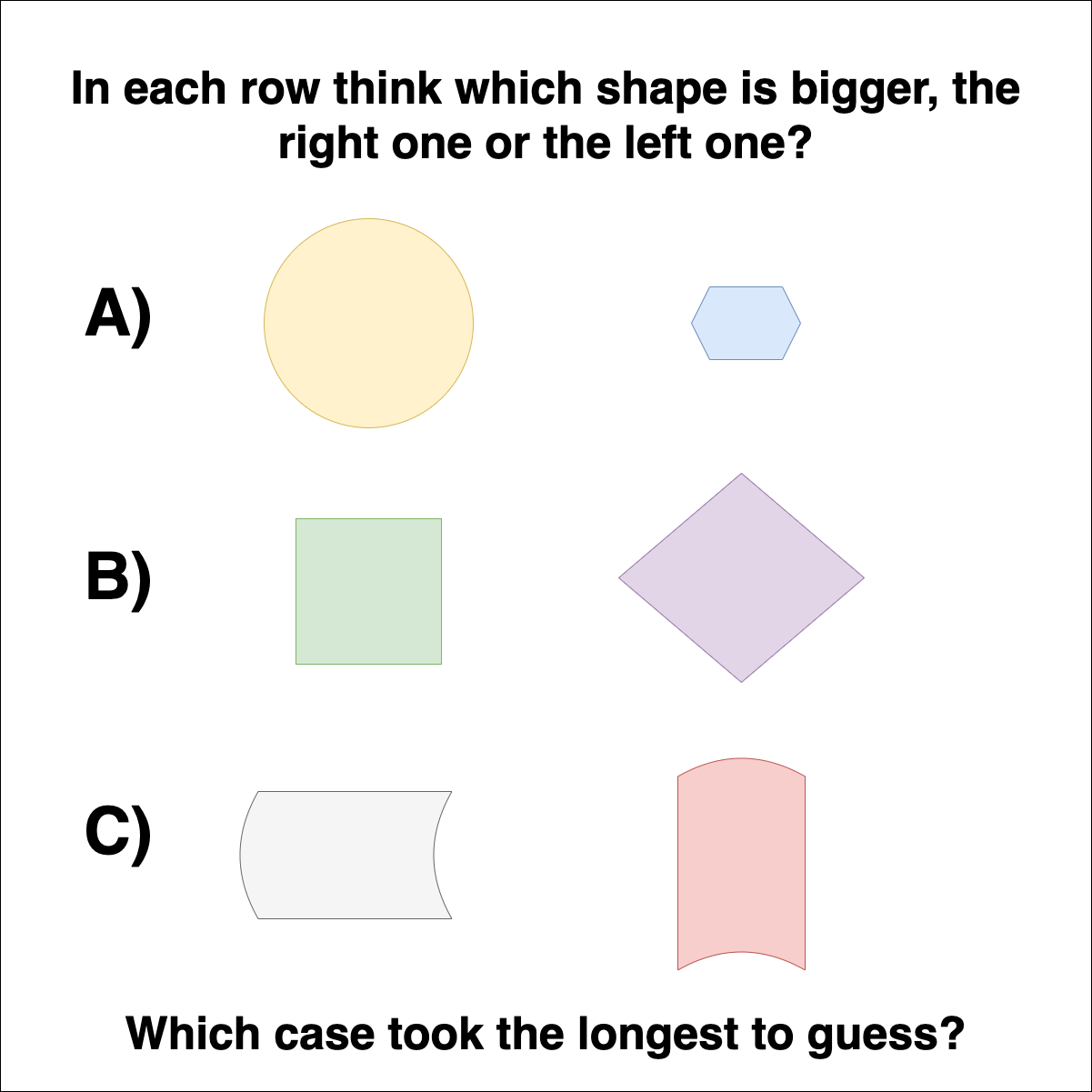

A fundamental truth of experimentation is that changes which have the largest impact are also those that can be detected in the smallest sample sizes. On the other-hand, the changes that have a smaller impact are those that take the largest sample sizes to be deemed as significant. Most experimenters do not realise this when they start testing and hence end up abandoning a lot of ideas that have a smaller but significant impact.

Metaphorically speaking, in the search for diamonds. the true gem probably does not take very long to be identified once you find it because it shines out from the rest. If this still doesn't make sense to you intuitively, observe the image below.

Implication in Experimentation

The intuition I explain above has a large impact on experimentation in general. The required sample sizes of an experiment are largely dependent on the minimum effect you expect to detect in the experiment. Statisticians have formalized this with the concept of a minimum detectable effect that we have explained in the article on statistical power.

As an experimenter it is handy to know that when you are small and starting out, testing smaller improvements are a luxury that you cannot afford. Larger improvements are probably the only ones you will be able to test but on the brigher side you have a better chance at finding them too. This is because you are new and your website probably has a lot of flaws that can be removed to reveal a larger conversion rate. Websites with a lot of traffic have already been tuned so much that finding a truly great idea is very difficult in that arena.

Incremental improvements might not be something that you want, but it helps to understand that testing and making money off incremental improvements is a luxury that you earn with an increasing amount of traffic. Higher traffic allows you to test out finely nuanced changes and if they work they generate loads of revenue because of your large visitor base.

A great example of such a luxury is the famous "41 shades of blue" experiment that was led by Marissa Meyer at Google in 2009, to find the right shade of blue that elicits the highest conversion rate on ad links. The winner of the experiment increased Google's revenue by a whopping $200 million a year. If you are small, it is great to be inspired by these stories but don't be carried away, it is a long way before you can even detect such changes.

Guardrail Experimentation

While the story up till now might be a bit sad to budding experimenters, there is a whole different arena of experimentation where this intuition comes in handy for smaller websites. Has it ever struck you that you need not always test to improve a metric, but sometimes you can also test to maintain the status quo?

When mature companies launch a change they often define good guardrails that should not be impacted. For instance, a company launching a design change on their website might want to ensure that the page-load time does not increase in the process of change. These metrics are called guardrail metrics and often they are defined as secondary metrics of a test. These are the metrics that are not targeted to be improved but rather to be maintained as they are.

The effect size-sample size tradeoff plays a very fortunous role in guardrai experimentation. Changes that have a smaller impact on a guardrail metric might not be detected in a few samples, but changes that hurt the guardrail metrics with a large margin are detected very fast. Larger companies set up automatic experiment-shutdown if a guardrail metric is hurt which is usually quick to be detected. This way very less visitors see the hazardous experiment and disaster is automatically averted.

One good piece of advice if you are a smaller company is to flip the use case of experimentation. Don't necessarily test only to measure improvements, but try out running tests to ensure that important metrics are not being hurt. Good ideas might be hard to come by, but bad ideas can be successfully averted.

The way ahead

As an experimenter, the truth is that most ideas that you will generate will be mediocre. You will probably sail on the level of mediocrity that your sample sizes permit for you. But it is not a bad thing. Stories of hugely successful ideas are inspiring but rare. The more realistic path towards greatness goes through various incremental improvements that you can iteratively test.

In the next article, I explain an interesting concept that is still quite unpopular in experimentation. The Region of Practical Equivalence (ROPE) lets you define a region of improvements that do not make sense from an implementation point of view. The concept is similar to MDE but yet has nuanced differences that are worth understanding.